Classification Model of Predicting Movie Ratings Prior to Being Released

Predicting Movie Ratings Prior to being Released

What factors determine a high rating for a movie? This analysis investigates features that can predict, before release, whether a movie will receive a high or low rating. The scenario assumes a market in which streaming services can buy movies before they are released, essentially buying low before the movie’s performance is realized. If transaction costs are high, the optimal strategy is to limit Type I error, that is, to minimize false positives. A false positive occurs when the model predicts the positive class (True/1) but the actual outcome is negative (False/0). Here, that is analogous to buying a “lemon”. If transaction costs are low, the better strategy is to minimize Type II error (false negatives), where the model predicts False/0 but the actual outcome is True/1. This analysis falls under the former case, so the goal is to minimize Type I error, which in practice means favoring precision.
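
To make the link between the two error types and the usual metrics concrete, here is a minimal sketch that computes precision and recall from confusion-matrix counts. The counts and function names are made up for illustration and are not taken from the notebook.

```python
def precision(tp, fp):
    """Share of predicted high-rated movies that really are high-rated.

    Fewer false positives (Type I errors) means higher precision.
    """
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Share of truly high-rated movies that the model catches.

    Fewer false negatives (Type II errors) means higher recall.
    """
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical counts: 40 good buys, 10 "lemons", 30 missed good movies.
tp, fp, fn = 40, 10, 30
print(f"precision={precision(tp, fp):.2f} recall={recall(tp, fn):.2f}")
# prints: precision=0.80 recall=0.57
```

With high transaction costs, each of the 10 false positives is a costly purchase, so precision is the number to watch.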

The data were collected by scraping the IMDB website. A first scrape gathers general information about each movie, and a second scrape visits each movie’s URL on IMDB to collect budget, production companies, creators, etc. The code for the main scrape came from Amer Shalan, which can be found here.

The Jupyter notebook can be found here.

Data

The main scrape covered approximately 10,000 movies, which were then filtered to those released in 2000 or later. Each remaining movie’s page was scraped for budget, rating, production companies, and creators. Only movies with a positive budget are used in the model.
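
The filtering step can be sketched as follows. The records and the field names (`title`, `year`, `budget`) are hypothetical stand-ins for the scraped data. The actual notebook may structure this differently.

```python
# Hypothetical scraped records; field names are assumptions.
movies = [
    {"title": "A", "year": 1998, "budget": 5_000_000},
    {"title": "B", "year": 2005, "budget": 0},
    {"title": "C", "year": 2010, "budget": 20_000_000},
    {"title": "D", "year": 2000, "budget": None},
    {"title": "E", "year": 2000, "budget": 1_500_000},
]


def keep_movie(movie, min_year=2000):
    """Keep movies released in min_year or later with a known, positive budget."""
    year = movie.get("year")
    budget = movie.get("budget")
    if year is None or budget is None:
        return False
    return year >= min_year and budget > 0


filtered = [m for m in movies if keep_movie(m)]
print([m["title"] for m in filtered])
# prints: ['C', 'E']
```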

The features used in the models are:

- Runtime

- Budget

- Actors

- Directors

- Genres

- Source (Screenplay/Novel)

The next section describes the feature engineering.

Feature Engineering

Actors

Actors were binned into the following categories: super actors, top actors, mid actors, and bottom actors. This first iteration uses these bins as they are. Later iterations need to account for time effects, because an actor who was a top actor in the early 2000s is not necessarily a top actor now, and vice versa. Leonardo DiCaprio, for example, is a top actor now but was not in the early 2000s.

Directors

Directors were binned into the following categories: Super Director, Top Director, and Mid Director. This iteration uses these bins as they are. Later iterations will need to account for time effects, just as for actors.

Production Companies

Production companies were binned into Big Six or not. The Big Six companies are:

- Warner Bros.

- Columbia Pictures (Sony)

- Walt Disney Studios

- Universal

- Paramount

- Twentieth Century Fox
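
A Big Six indicator along these lines could be built with a simple name match. This is only a sketch: the substring matching, the name spellings, and the `20th century fox` alias are assumptions, and a loose pattern such as `universal` could also match unrelated companies in real data.

```python
# Lower-cased name fragments for the Big Six; spellings are assumptions.
BIG_SIX = (
    "warner bros",
    "columbia pictures",
    "walt disney",
    "universal",
    "paramount",
    "twentieth century fox",
    "20th century fox",  # assumed alias
)


def is_big_six(companies):
    """Return 1 if any production company name contains a Big Six name, else 0."""
    lowered = [company.lower() for company in companies]
    return int(any(name in company for company in lowered for name in BIG_SIX))


print(is_big_six(["Warner Bros. Pictures", "Legendary Entertainment"]))  # prints: 1
print(is_big_six(["A24"]))  # prints: 0
```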

Novel/Screenplay

Movies were binned into:

- Novel

- Screenplay

Other source types, such as books and comics, also appeared in the data.

Genre

There were many genres, such as Drama, Comedy, Action, Adventure, and Thriller. Many of them overlapped, so only the two dominant genres were used:

- Drama

- Comedy

All other genres were grouped together and omitted.
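
Because a movie can carry several genres at once, one way to encode this is with two independent 0/1 flags. The function and key names below are hypothetical, chosen only to illustrate the idea.

```python
def genre_flags(genres):
    """Map a movie's genre list to Drama/Comedy indicator flags.

    Any other genre contributes nothing, which mirrors grouping the
    remaining genres together and omitting them.
    """
    names = {genre.strip().lower() for genre in genres}
    return {
        "is_drama": int("drama" in names),
        "is_comedy": int("comedy" in names),
    }


print(genre_flags(["Drama", "Thriller"]))
# prints: {'is_drama': 1, 'is_comedy': 0}
```

A movie tagged only as Action or Adventure ends up with both flags set to 0.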

Models

- Decision Tree

- Random Forest

- Extra Trees

- Gradient Boosting

Results

Iterating over rating thresholds between 6.4 (both the median and the mean) and 8.0, a threshold of 7.4 gave the highest precision for the Random Forest model. At a threshold of 7.1, the Random Forest model has slightly lower precision and higher recall, and 7.1 is also the threshold at which the Gradient Boosting model’s precision is maximized. Different thresholds might be chosen depending on whether the goal is to minimize Type I or Type II error. Since the goal here is to minimize Type I error, movies are split into high and low ratings at 7.1.
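
The threshold search can be sketched as a loop that relabels the data at each candidate rating and re-evaluates. Everything here is an assumption made for illustration: the 0.1 step size, the `>=` convention for "high", the sample ratings, and the `evaluate` callback, which in a real run would train a model on the new labels and return its precision.

```python
def candidate_thresholds(start=6.4, stop=8.0, step=0.1):
    """Rating thresholds from start to stop inclusive (step size is an assumption)."""
    count = round((stop - start) / step)
    return [round(start + i * step, 1) for i in range(count + 1)]


def binarize(ratings, threshold):
    """Label a movie 1 (high) if its rating is at or above the threshold, else 0."""
    return [1 if rating >= threshold else 0 for rating in ratings]


def sweep(ratings, evaluate):
    """Relabel at every threshold and record whatever evaluate returns."""
    return {t: evaluate(binarize(ratings, t)) for t in candidate_thresholds()}


# Hypothetical ratings. As a stand-in for model precision, this demo
# records the share of movies labeled "high" at each threshold.
ratings = [5.9, 6.4, 6.8, 7.1, 7.4, 7.9, 8.2]
positive_share = sweep(ratings, lambda labels: sum(labels) / len(labels))
print(binarize(ratings, 7.1))
# prints: [0, 0, 0, 1, 1, 1, 1]
```

Raising the threshold shrinks the positive class, which is one reason precision and recall can move in different directions across thresholds.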

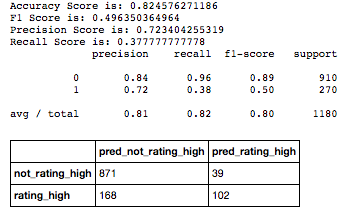

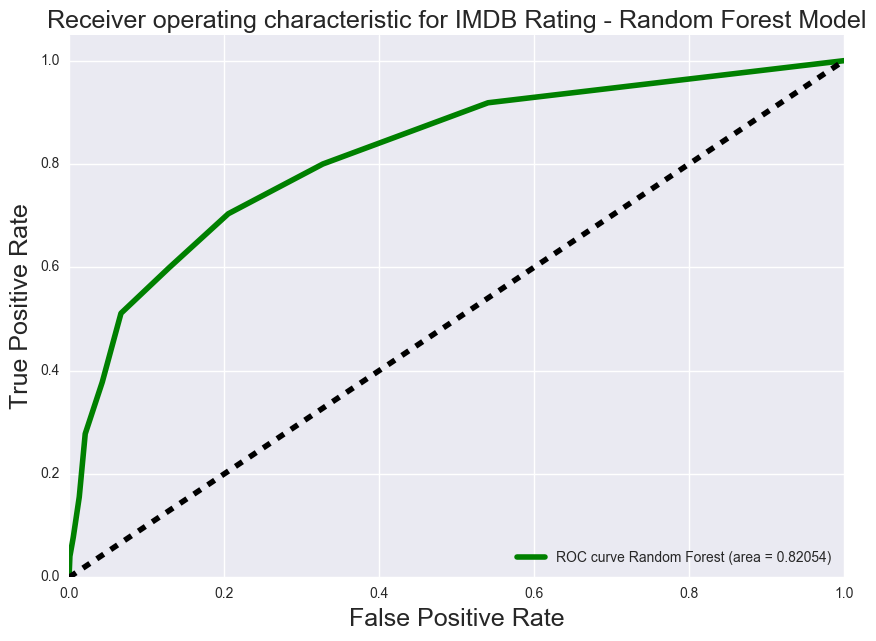

Random Forest Model

Since the goal is to minimize Type I error, the split between high rating and low rating is 7.1. The following are the results:

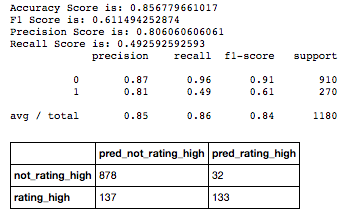

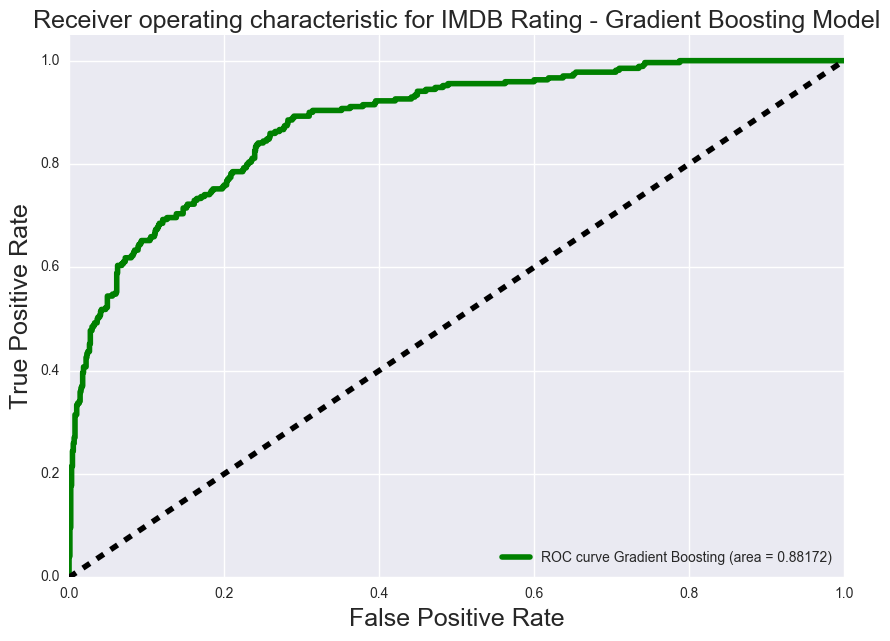

Gradient Boosting Model

Since the goal is to minimize Type I error, the split between high rating and low rating is 7.1. The following are the results:

In both models, runtime, budget, and year were the three most important features. The next several features involve actors and directors. Budget and the Big Six indicator might be correlated, as Big Six production companies generally have higher budgets than non-Big Six production companies.
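
One quick way to check the suspected relationship between budget and the Big Six indicator is a Pearson correlation between the two columns. The sketch below uses invented numbers, so the resulting value says nothing about the real data.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Hypothetical budgets (in millions) and Big Six flags for six movies.
budgets = [5, 12, 150, 90, 20, 200]
big_six = [0, 0, 1, 1, 0, 1]
print(f"correlation = {pearson(budgets, big_six):.2f}")
```

A value close to 1 on the real data would suggest the two features carry overlapping information, which can split importance between them in tree-based models.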

Conclusion

The Gradient Boosting model achieves a precision score of 0.81, by far the best score given the goal of minimizing Type I error. The Random Forest model gave a precision score of 0.72. To make this analysis more robust, the director and actor bins need to be re-evaluated, because actor and director rankings change over the years. That would be the next step.